Abstract

Collecting fully annotated image datasets is challenging and expensive. Many types of weak supervision have been explored: weak manual annotations, web search results, temporal continuity, ambient sound and others. We focus on one particular unexplored mode: visual questions that are asked about images. The key observation that inspires our work is that the question itself provides useful information about the image (even without the answer being available). For instance, the question "what is the breed of the dog?" informs the AI that the animal in the scene is a dog and that there is only one dog present. We make three contributions: (1) providing an extensive qualitative and quantitative analysis of the information contained in human visual questions, (2) proposing two simple but surprisingly effective modifications to the standard visual question answering models that allow them to make use of weak supervision in the form of unanswered questions associated with images and (3) demonstrating that a simple data augmentation strategy inspired by our insights results in a 7.1% improvement on the standard VQA benchmark.

There are three tasks described in the paper:

1. Image Descriptions

We analyze whether visual questions contain enough information to produce an accurate description of the image, using a Seq2Seq model to generate a caption from the questions.

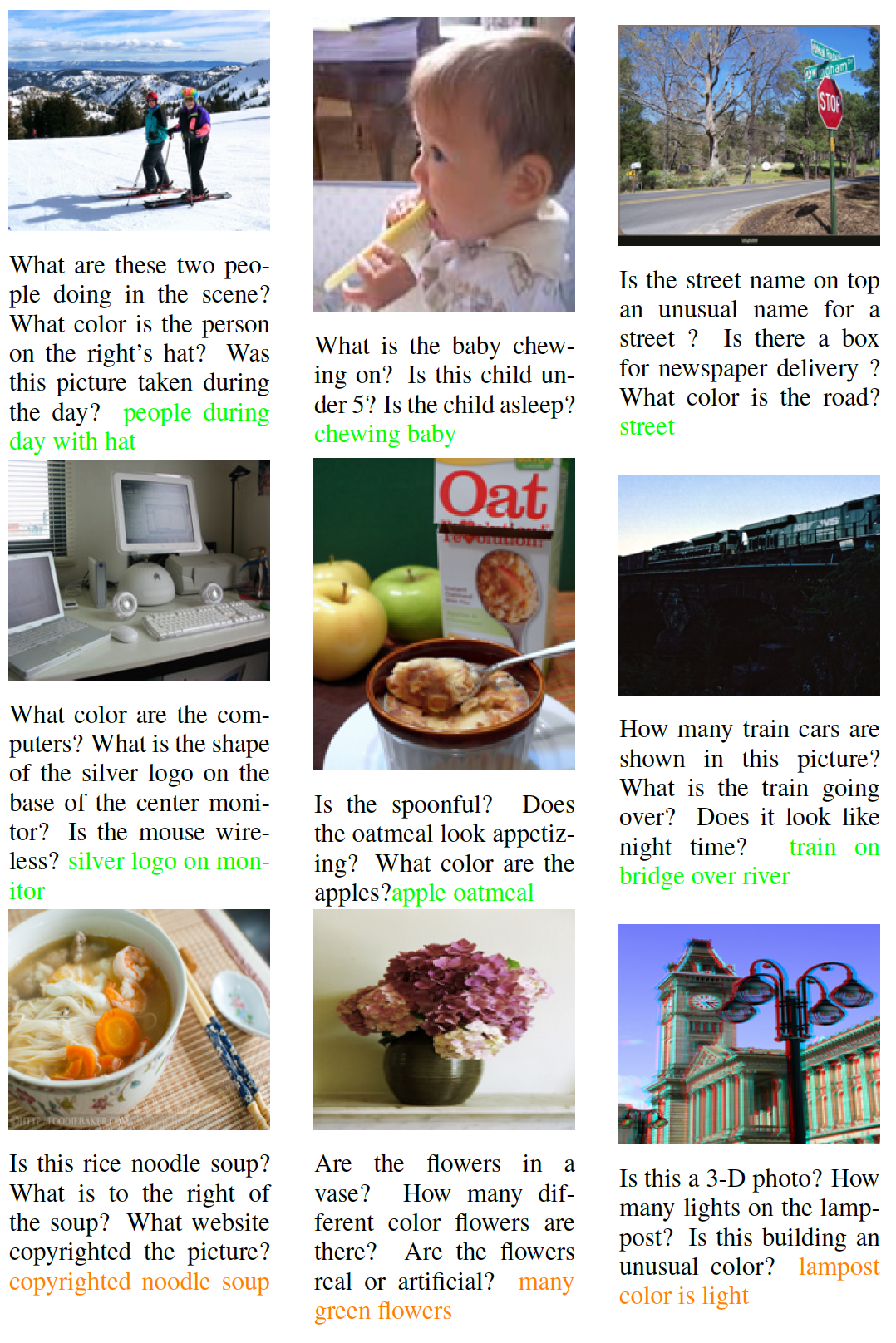

Three visual questions and the caption generated from them using the Seq2Seq model. Some captions are surprisingly accurate (green) while others are less so (orange).

2. Object Classification

Visual questions can provide information about the object classes that are present in the image. E.g., asking “what color is the bus?” indicates the presence of a bus in the image.
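As a rough illustration of the idea, the sketch below scans a set of questions for words that name known object classes. It is a minimal keyword-matching example, not the method used in the paper; the class vocabulary `OBJECT_CLASSES` and the helper `classes_mentioned` are hypothetical names introduced here.

```python
import re

# Hypothetical class vocabulary; a real setup would use the label set
# of the target classification dataset.
OBJECT_CLASSES = {"bus", "dog", "cat", "person", "car"}


def classes_mentioned(questions, vocabulary=OBJECT_CLASSES):
    """Return the object classes named in any of the given questions."""
    found = set()
    for question in questions:
        for token in re.findall(r"[a-z]+", question.lower()):
            if token in vocabulary:
                found.add(token)
            # Crude plural handling (assumption): "dogs" -> "dog".
            elif token.endswith("s") and token[:-1] in vocabulary:
                found.add(token[:-1])
    return found


questions = ["What color is the bus?", "How many dogs are there?"]
print(sorted(classes_mentioned(questions)))
```

Simple matching like this misses synonyms and irregular plurals, and a mentioned object is not guaranteed to be present, so the output is best treated as a weak label.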

3. Visual Question Answering

Visual Question Answering (VQA) is the task of providing an accurate natural language answer, given an image and a natural language question about that image. Visual questions focus on different areas of an image, including background details and underlying context. We use not only the target question but also the other, unanswered questions asked about the same image.
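One way to picture how unanswered questions could feed into a bag-of-words VQA model is sketched below: the word counts of the target question are concatenated with the word counts of the other questions asked about the same image. This is an assumed reading of the approach, not a confirmed description of iBOWIMG-2x; the function names and the toy vocabulary are hypothetical, and the image features that a full model would also use are omitted.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def bag_of_words(questions, vocab):
    """Count how often each vocabulary word occurs across the questions."""
    counts = Counter(tok for q in questions for tok in tokenize(q))
    return [counts[word] for word in vocab]


def build_text_features(target_question, other_questions, vocab):
    """Concatenate the target-question vector with the vector of the
    other (unanswered) questions about the same image (assumed design)."""
    target_vec = bag_of_words([target_question], vocab)
    context_vec = bag_of_words(other_questions, vocab)
    return target_vec + context_vec


vocab = ["what", "color", "bus", "dog"]  # toy vocabulary for illustration
features = build_text_features(
    "What color is the bus?",
    ["Is the dog on the bus?"],
    vocab,
)
print(features)
```

The resulting text vector is twice the vocabulary size; in a full model it would be combined with image features before the answer classifier.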

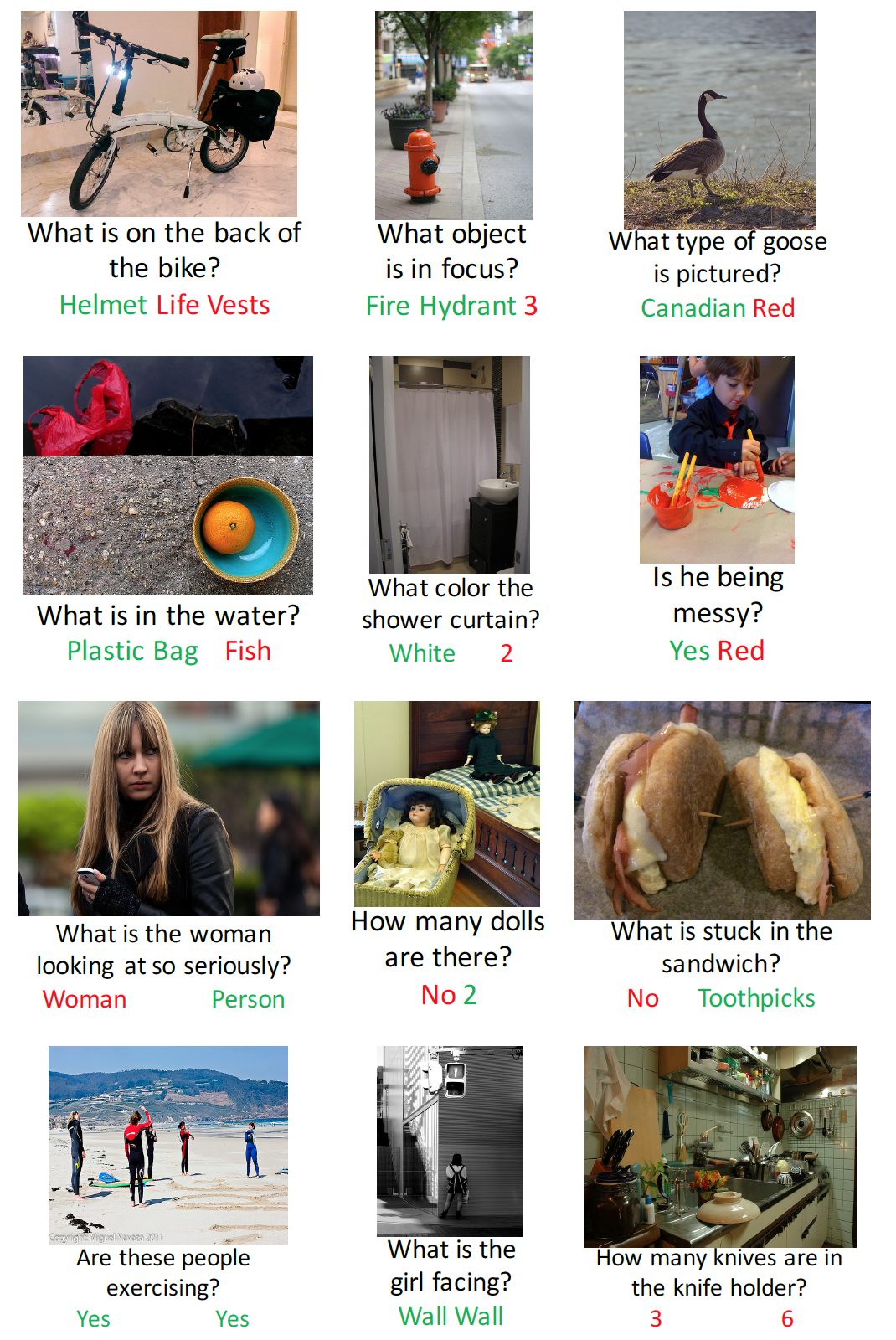

Qualitative comparison of results from our iBOWIMG-2x (left) and the baseline iBOWIMG (right). Correct answers are in green; wrong answers are in red.